Deep learning is a subset of machine learning that has gained tremendous popularity in recent years because it can learn useful representations directly from data and make predictions or decisions without task-specific rules being programmed by hand. It is based on artificial neural networks (ANNs), which are loosely inspired by the structure and function of the human brain. Deep learning has enabled breakthroughs in a wide range of applications, including image and speech recognition, natural language processing, autonomous driving, and medical diagnosis.

In this article, we will explore the basics of deep learning, including its history, architecture, and training process. We will also discuss its applications, limitations, and future prospects. You can also read our article on artificial intelligence.

History of Deep Learning

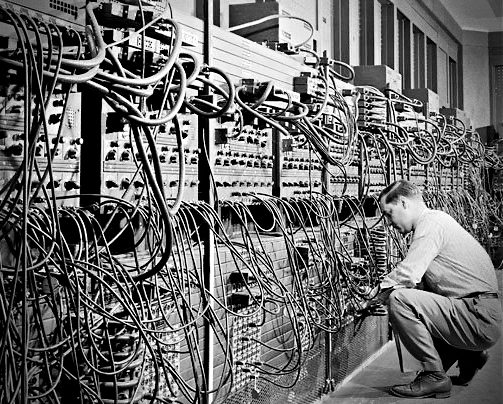

The history of deep learning can be traced back to the 1940s, when the first artificial neural network was proposed by Warren McCulloch and Walter Pitts. They showed that a simple mathematical model of a neuron could perform logical functions and that these neurons could be connected together to form a network. However, early neural networks were limited in their capabilities and were not considered to be practical tools for solving real-world problems.

It was not until the 1980s that backpropagation, the algorithm that made it practical to train multi-layer neural networks, became widely known, largely through a 1986 paper by David Rumelhart, Geoffrey Hinton, and Ronald Williams. Backpropagation trains an artificial neural network by propagating the error, that is, the difference between predicted and actual outputs, backwards through the network, so that the network can adjust its weights and learn from the data. Multi-layer networks had previously been considered too complex to train.

In the 1980s and 1990s, deep learning was still a relatively niche field, with limited applications and few success stories. One of the main challenges was the lack of data and computing power needed to train large neural networks. The available datasets were small and limited in scope, and the available hardware was not powerful enough to train large networks.

In the early 2000s, deep learning fell out of favor due to the lack of progress and the high cost of research and development. Researchers shifted their focus to other areas of machine learning, such as support vector machines and decision trees, which were considered more practical and effective for many applications.

However, with the advent of big data and powerful computing hardware, deep learning experienced a resurgence in the 2010s. Researchers began to collect and annotate large datasets, such as ImageNet, which contained millions of labeled images and enabled the training of large-scale convolutional neural networks (CNNs). CNNs are a type of neural network that are particularly well-suited for image recognition tasks.

The development of recurrent neural networks (RNNs) also contributed to the resurgence of deep learning. RNNs are a type of neural network that can model sequential data, such as natural language and time-series data. They are particularly well-suited for tasks such as speech recognition and machine translation.

In recent years, deep learning has become one of the most popular subfields of machine learning, with widespread applications in industry, academia, and research. It has enabled breakthroughs in a wide range of applications, including image and speech recognition, natural language processing, autonomous driving, and medical diagnosis, among others.

Success of deep learning

The success of deep learning can be attributed to several factors. First, the availability of large datasets has enabled researchers to train deep neural networks with millions of parameters. Second, the availability of powerful computing hardware, such as graphics processing units (GPUs), has enabled researchers to speed up the training process and train larger and more complex networks. Finally, the development of new deep learning algorithms, such as generative adversarial networks (GANs) and deep reinforcement learning, has enabled researchers to tackle new and challenging problems.

In conclusion, the history of deep learning is a story of perseverance, innovation, and progress. From its humble beginnings in the 1940s to its resurgence in the 2010s, deep learning has become one of the most exciting and promising fields in artificial intelligence. With continued research and development, deep learning is likely to drive advances in AI and transform our world in the years to come.

Architecture of Deep Learning

The architecture of deep learning refers to the design and structure of neural networks used in deep learning. A neural network is a computational model that is inspired by the structure and function of the human brain. It consists of layers of interconnected nodes, or neurons, that process and transmit information.

The basic building block of a neural network is a neuron, which takes one or more inputs, applies a linear transformation to them, and then passes the result through a non-linear activation function. The activation function helps to introduce non-linearity into the model and allows the network to learn complex relationships between inputs and outputs.
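
As a minimal sketch of the neuron described above, the following Python snippet computes a weighted sum of the inputs and passes it through an activation function. The sigmoid activation, the function names, and the example values are our own choices for illustration; many other activation functions are used in practice.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """Linear transformation (weighted sum plus bias) followed by a sigmoid."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

# Hypothetical example values, chosen so the weighted sum is exactly zero.
output = neuron(inputs=[1.0, 2.0], weights=[0.5, -0.25], bias=0.0)
print(output)  # 0.5, because sigmoid(0) = 0.5
```

Without the activation function, stacking such neurons would only ever produce another linear transformation, which is why the non-linearity matters.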

A neural network can consist of many layers of neurons, with each layer performing a specific type of computation. The number of layers in a neural network is referred to as its depth, and networks with many layers are called deep neural networks.

The most common type of deep neural network is the feedforward neural network, which consists of an input layer, one or more hidden layers, and an output layer. The input layer receives the raw input data, such as an image or a sequence of words, and passes it through the network to produce an output, such as a classification or prediction.
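
To make the flow from input layer to output layer concrete, here is a small sketch of a forward pass through a network with one hidden layer. The weights are hand-picked, hypothetical numbers rather than trained values, and the ReLU activation and helper names are assumptions made for this example.

```python
def relu(z):
    """Rectified linear unit: keep positive values, clip negatives to zero."""
    return max(0.0, z)

def identity(z):
    """No activation, used here for the output layer."""
    return z

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each row of `weights` feeds one neuron."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

# Hypothetical, hand-picked weights: 2 inputs -> 2 hidden neurons -> 1 output.
HIDDEN_W = [[1.0, -1.0], [0.5, 0.5]]
HIDDEN_B = [0.0, -1.0]
OUTPUT_W = [[2.0, 1.0]]
OUTPUT_B = [0.5]

def forward(x):
    """Pass the input through the hidden layer, then the output layer."""
    hidden = dense(x, HIDDEN_W, HIDDEN_B, relu)
    return dense(hidden, OUTPUT_W, OUTPUT_B, identity)

print(forward([3.0, 1.0]))  # [5.5]
```

For the input `[3.0, 1.0]`, the hidden layer produces `[2.0, 1.0]`, and the output neuron computes `2.0 * 2.0 + 1.0 * 1.0 + 0.5 = 5.5`.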

Convolutional neural networks (CNNs)

Convolutional neural networks (CNNs) are a type of neural network that are particularly well-suited for image recognition tasks. They use a special type of layer called a convolutional layer, which applies a series of filters to the input data to extract features at different levels of abstraction. The output of the convolutional layer is then passed through one or more fully connected layers to produce the final output.
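
The filtering step of a convolutional layer can be sketched in a few lines of plain Python. This is only an illustration under simplifying assumptions: a single filter, no padding, a stride of 1, and a hand-written filter instead of a learned one. As in most deep learning libraries, the filter is not flipped, so strictly speaking this is a cross-correlation.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# A tiny 3x4 "image" with a dark left half and a bright right half.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hypothetical 1x2 filter that responds to a left-to-right increase in brightness.
edge_filter = [[-1, 1]]

print(conv2d(image, edge_filter))
# [[0, 1, 0], [0, 1, 0], [0, 1, 0]]
```

The feature map is non-zero only where the brightness changes, which is the kind of low-level feature an early convolutional layer tends to pick up. In a real CNN the filter values are learned during training.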

Recurrent neural networks (RNNs)

Recurrent neural networks (RNNs) are a type of neural network that are particularly well-suited for sequential data, such as natural language or time-series data. They use a special type of neuron called a recurrent neuron, which allows information to be passed from one time step to the next. This makes RNNs particularly effective for tasks such as speech recognition and machine translation.
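
The idea of passing information from one time step to the next can be illustrated with a single recurrent unit. The sketch below assumes a scalar input, a scalar hidden state, a tanh activation, and hypothetical weight values; real RNNs use vectors and learned weight matrices.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One time step: combine the current input with the previous hidden state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Feed a sequence through the unit and collect the hidden state at each step."""
    h = 0.0  # initial hidden state
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# Only the first input is non-zero, yet later hidden states are still non-zero:
# the unit carries a fading trace of the first input forward in time.
states = run_rnn([1.0, 0.0, 0.0])
print(states)
```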

Long short-term memory (LSTM)

Long short-term memory (LSTM) networks are a type of RNN designed to address the problem of vanishing gradients, which occurs when an RNN is trained on long sequences and the error signal shrinks as it is propagated back through many time steps. LSTM networks use a special type of unit called an LSTM cell, whose gates control what is stored, kept, and read out, allowing information to be retained over long periods of time.
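
A single-unit LSTM cell can be sketched as follows. This is a simplified, scalar version of the standard gate equations; the parameter layout (a dictionary mapping each gate name to an input weight, a recurrent weight, and a bias) and all numeric values are assumptions made for this example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One time step of a single-unit LSTM; `p` maps gate name -> (w_x, w_h, b)."""
    def gate(name, squash):
        w_x, w_h, b = p[name]
        return squash(w_x * x + w_h * h_prev + b)

    f = gate("forget", sigmoid)        # how much of the old cell state to keep
    i = gate("input", sigmoid)         # how much new information to write
    g = gate("candidate", math.tanh)   # the new information itself
    o = gate("output", sigmoid)        # how much of the cell state to expose
    c = f * c_prev + i * g             # updated cell state (the long-term memory)
    h = o * math.tanh(c)               # updated hidden state (the output)
    return h, c

# Hypothetical parameters: forget gate almost fully open, input gate almost closed.
params = {
    "forget": (0.0, 0.0, 10.0),
    "input": (0.0, 0.0, -10.0),
    "candidate": (1.0, 0.0, 0.0),
    "output": (0.0, 0.0, 0.0),
}

h, c = 0.0, 1.0  # start with a value of 1.0 stored in the cell
for _ in range(50):
    h, c = lstm_step(0.0, h, c, params)
print(c)  # still close to 1.0 after 50 time steps
```

With the forget gate close to 1, the cell state is carried forward almost unchanged, which is how an LSTM can hold on to information across many time steps.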

Generative adversarial networks (GANs)

Generative adversarial networks (GANs) are a type of neural network that are used for generating new data that is similar to the training data. GANs consist of two networks: a generator network, which generates new data, and a discriminator network, which tries to distinguish between the generated data and the real data. The generator network is trained to produce data that is indistinguishable from the real data, while the discriminator network is trained to become better at distinguishing between the two.
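
The opposing goals of the two networks show up most clearly in their loss functions. The sketch below leaves out the networks themselves and only computes the losses from the discriminator's scores, where a score is its estimated probability that a sample is real. It assumes binary cross-entropy and the commonly used "non-saturating" form of the generator loss; the function names and sample scores are hypothetical.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Low when real samples score near 1 and generated samples score near 0."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Low when the discriminator scores generated samples near 1 (it is fooled)."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Case 1: the discriminator easily spots the generated samples.
print(discriminator_loss([0.9, 0.8], [0.1, 0.2]), generator_loss([0.1, 0.2]))

# Case 2: the generator has improved and the discriminator is fooled more often.
print(discriminator_loss([0.9, 0.8], [0.7, 0.6]), generator_loss([0.7, 0.6]))
```

Moving from the first case to the second, the generator's loss falls while the discriminator's loss rises, which reflects the tug-of-war between the two networks during training.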

In conclusion, the architecture of deep learning refers to the design and structure of neural networks used in deep learning. Different types of neural networks are used for different types of tasks, such as image recognition, natural language processing, and data generation. The choice of architecture depends on the specific task at hand and the characteristics of the input data.

Training Process of Deep Learning

The training process of deep learning involves exposing the neural network to a dataset and adjusting its internal parameters, or weights, to minimize the difference between its predicted output and the actual output. That difference is measured by a loss function. Backpropagation propagates the error in the output backwards through the network to compute how much each weight contributed to it, and an optimization algorithm such as gradient descent then adjusts the weights to reduce the loss.
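
The following sketch trains a deliberately tiny network, with one hidden tanh unit and one linear output, to show the forward pass, the backward pass, and the weight update in order. The starting weights, learning rate, number of epochs, and synthetic data are all assumptions chosen for illustration, and the weights are updated after every example (stochastic gradient descent).

```python
import math

def train(xs, ys, lr=0.1, epochs=200):
    """Fit y ~ w2 * tanh(w1 * x + b1) + b2 using backpropagation and gradient descent."""
    w1, b1, w2, b2 = 0.5, 0.0, 0.5, 0.0  # arbitrary starting weights (assumption)
    losses = []
    for _ in range(epochs):
        total = 0.0
        for x, y in zip(xs, ys):
            # Forward pass: compute the prediction and its squared error.
            h = math.tanh(w1 * x + b1)
            y_hat = w2 * h + b2
            error = y_hat - y
            total += 0.5 * error ** 2

            # Backward pass: apply the chain rule from the output back to the input.
            grad_w2 = error * h
            grad_b2 = error
            grad_z = error * w2 * (1.0 - h ** 2)  # derivative of tanh is 1 - tanh^2
            grad_w1 = grad_z * x
            grad_b1 = grad_z

            # Gradient descent: move each weight a small step against its gradient.
            w1 -= lr * grad_w1
            b1 -= lr * grad_b1
            w2 -= lr * grad_w2
            b2 -= lr * grad_b2
        losses.append(total / len(xs))  # average loss over this epoch
    return (w1, b1, w2, b2), losses

xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
ys = [2.0 * math.tanh(1.5 * x) for x in xs]  # synthetic targets
params, losses = train(xs, ys)
print(f"loss in first epoch: {losses[0]:.4f}, in last epoch: {losses[-1]:.4f}")
```

Deep learning frameworks compute these gradients automatically, but the principle is the same: the error at the output is traced back through each layer to decide how every weight should change.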

The training process requires a large amount of labeled data, where each data point is associated with a known output. The labeled data is divided into training, validation, and test sets. The training set is used to train the network, while the validation set is used to tune the hyperparameters of the network, such as the learning rate and the number of hidden layers. The test set is used to evaluate the performance of the network on unseen data.
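
One simple way to produce the three sets is to shuffle the labeled examples once and cut the list into three parts. The 80/10/10 proportions, the fixed seed, and the function name below are assumptions for this sketch; the right proportions depend on how much data is available.

```python
import random

def split_dataset(examples, train_frac=0.8, val_frac=0.1, seed=0):
    """Shuffle a copy of `examples` and cut it into train, validation, and test lists."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split reproducible
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # everything that is left over
    return train, val, test

# Hypothetical labeled data: each example is an (input, label) pair.
examples = [(x, x % 2) for x in range(100)]
train_set, val_set, test_set = split_dataset(examples)
print(len(train_set), len(val_set), len(test_set))  # 80 10 10
```

Because the three lists do not overlap, the test set stays unseen until the final evaluation.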

Applications of Deep Learning

Deep learning has been successfully applied to a wide range of applications, including image and speech recognition, natural language processing, autonomous driving, and medical diagnosis.

Image recognition

Deep learning algorithms can learn to recognize objects, faces, and scenes with high accuracy, even in complex and cluttered environments. This has enabled applications such as self-driving cars, facial recognition systems, and image search engines.

Speech recognition

Deep learning algorithms can transcribe speech into text with high accuracy. This has enabled voice assistants such as Siri, Alexa, and Google Assistant, as well as other speech-based applications.

Natural language processing

Deep learning algorithms can process and generate human-like language. This has enabled applications such as customer service chatbots, virtual assistants, and language translation systems.

Autonomous driving

Deep learning algorithms can analyze sensor data from cameras, lidars, and radars to detect and avoid obstacles, navigate roads, and make driving decisions. This has enabled applications such as self-driving cars and delivery robots.

Medical diagnosis

Deep learning algorithms can analyze medical images, such as X-rays and MRIs, to detect and classify diseases with high accuracy, potentially saving lives and reducing healthcare costs. This has enabled applications such as automated screening for breast cancer and skin cancer.

Limitations and Challenges of Deep Learning

Despite its many successes, deep learning also has some limitations and challenges. One of the main challenges is the need for large amounts of labeled data for training. Labeled data is data that has been manually annotated with the correct output, such as a label or a category. Collecting and labeling large amounts of data can be time-consuming and expensive, especially for specialized or rare tasks.

Another challenge is the need for powerful hardware, such as graphics processing units (GPUs), to train and run deep learning models. Deep learning models can be computationally intensive and require large amounts of memory, making them difficult to run on low-end devices.

A third challenge is the lack of interpretability of deep learning models. Deep learning models are often considered “black boxes” because it is difficult to understand how they arrive at their predictions or decisions. This can be a problem in applications where transparency and accountability are important, such as healthcare and law enforcement.

Future Prospects

Despite these challenges, deep learning is likely to continue to drive advances in AI and transform our world in the years to come. One area of research is the development of more efficient deep learning algorithms that require less data and less computational power. Another area is the development of more interpretable deep learning models that can explain their predictions or decisions.

Another area of research is the development of deep learning models that can learn from multiple modalities, such as images, text, and speech, to solve more complex problems. This is known as multi-modal learning and has the potential to revolutionize many fields, such as robotics, healthcare, and finance.

Another area of research is the development of deep learning models that can learn from unlabeled data, such as raw audio, video, and text. This is known as unsupervised learning and has the potential to enable machines to learn from raw data without the need for human labeling.

Finally, there is growing interest in the development of deep learning models that can learn from limited amounts of data, known as few-shot learning or one-shot learning. This has the potential to enable machines to learn new tasks with just a few examples, similar to how humans learn.

Conclusion

Deep learning is a powerful and versatile tool for solving a wide range of AI problems. It has enabled breakthroughs in image and speech recognition, natural language processing, autonomous driving, and medical diagnosis, among others. Its ability to learn from large amounts of data and perform feature extraction automatically has made it a popular choice for many applications. However, deep learning also has some limitations and challenges, such as the need for large amounts of labeled data and powerful hardware. Despite these challenges, deep learning is likely to continue to drive advances in AI and transform our world in the years to come.